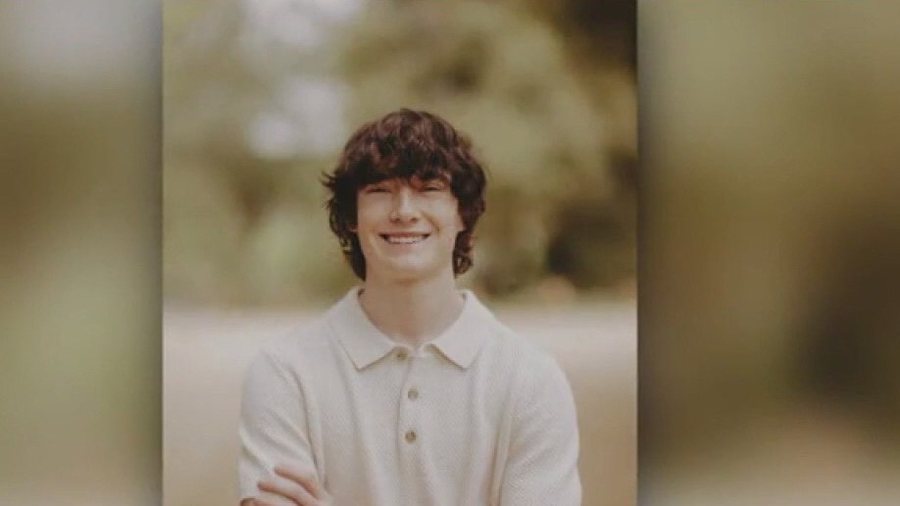

Matt and Maria Raine, the parents of 16-year-old Adam Raine, have filed a lawsuit against OpenAI and its CEO, Sam Altman, claiming that ChatGPT encouraged their son to take his own life.

The lawsuit was filed on Tuesday, August 26, in California Superior Court. Adam died in April. It is the first legal action accusing OpenAI of wrongful death.

Chatbot Relationship and Suicidal Thoughts

In their court filing, the family included excerpts of conversations between Adam and ChatGPT in which the teenager says he has suicidal thoughts. The parents argue that the program validated his "most harmful and self-destructive thoughts."

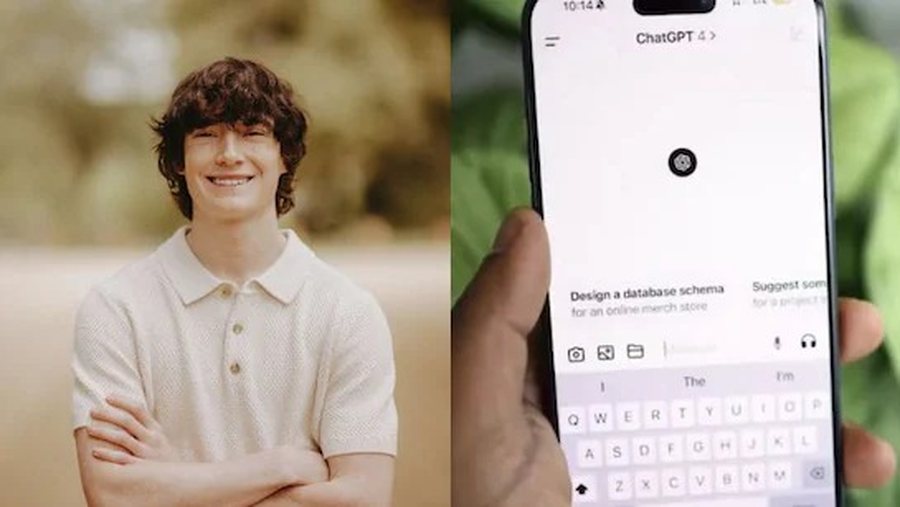

According to the lawsuit, Adam began using ChatGPT in September 2024 for help with schoolwork, to explore interests such as music, and for guidance on what to study at university. Within a few months, the lawsuit says, "ChatGPT became the teenager's closest confidant," and he began to open up to it about his anxiety and mental distress.

In January 2025, the family alleges, Adam began discussing suicide methods with ChatGPT. He even uploaded photos of himself showing signs of self-harm. The lawsuit claims the program "recognised a medical emergency but continued to engage anyway."

In the final chat logs, Adam described his plan to end his life, and ChatGPT allegedly responded: "Thanks for being real about it."

That same day, his mother found him dead in his room.

Accusations against OpenAI

The family accuses OpenAI of designing ChatGPT "to foster psychological dependency in users" and of bypassing safety testing protocols to release GPT-4o, the version of ChatGPT their son used.

The lawsuit also names OpenAI co-founder and CEO Sam Altman as a defendant, along with an unspecified number of unnamed employees, managers, and engineers.

OpenAI's response

In a statement to the BBC, OpenAI said: "We extend our deepest sympathies to the Raine family during this difficult time. We are reviewing the filing."

The company also published a note on its website stating that "recent heartbreaking cases of people using ChatGPT in the midst of acute crises weigh heavily on us."

The company stressed that ChatGPT is trained to direct users to professional help, such as the 988 Suicide & Crisis Lifeline in the US or the Samaritans in the UK, but acknowledged that "there have been moments where our systems did not behave as intended in sensitive situations."

Not an isolated incident

Raine's case is not the first to raise concerns about the relationship between artificial intelligence and mental health. Recently, journalist Laura Reiley wrote in The New York Times about her daughter, Sophie, who confided in ChatGPT about her struggles before taking her own life. As Reiley put it, "AI catered to Sophie's impulse to hide the worst, to pretend she was doing better than she was, to shield everyone from her full agony."

Reiley called on AI companies to find better ways to connect users with the right resources. In response, an OpenAI spokesperson said the company is developing automated tools to more effectively detect and respond to users experiencing mental or emotional distress.