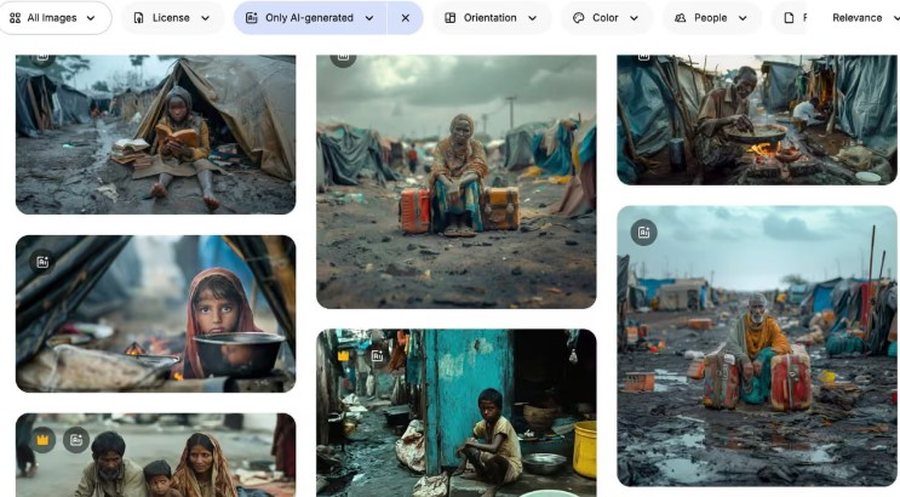

AI-generated images of starving children, survivors of sexual violence and scenes of extreme poverty are spreading rapidly on stock photo sites and are increasingly being used by global health NGOs. Many experts warn that this marks a new era of what has been called "poverty porn."

"It's become commonplace. Many organizations are using them or experimenting with them," said Noah Arnold of the Swiss organization Fairpicture, which promotes the ethical use of images in global development.

According to Arsenii Alenichev, a researcher at the Institute of Tropical Medicine in Antwerp, these images mimic the “visual grammar of poverty”: children with empty plates, cracked earth, sad faces and racial stereotypes. He has collected more than 100 AI-generated images that individuals or organizations have used in campaigns against hunger or sexual violence.

Some show children crying in muddy water, or an African girl in a wedding dress with tears on her face, scenes that Alenichev says amount to "poverty porn 2.0".

Why are these images spreading? Because they are cheap and require no permission or approval. “It's much cheaper and you don't have to deal with consents or privacy,” he said.

Dozens of such images appear on sites like Adobe Stock and Freepik, under captions such as "Asian children in a river with garbage" or "white volunteer helping black children in an African village". Images like these sell for around £60.

"They are deeply racist. These should never have been allowed," Alenichev added.

Freepik CEO Joaquín Abela said that responsibility falls on users, not platforms.

"If consumers want such images, there's nothing we can do. It's like trying to dry up the ocean," he said.

In recent years, even well-known organizations have used AI-generated images.

In 2023, Plan International released a video against early marriage with AI-generated images showing girls beaten and married off to older men.

Last year, the UN also posted a video featuring AI-generated “reenactments” of sexual violence in war, including one of a Burundian woman recounting how she was raped by three men during the civil war. The video was quickly deleted after criticism.

A UN spokesperson acknowledged that the material “represented inappropriate use of AI” and that it “could jeopardize the integrity of information by mixing truth with fiction.”

Experts warn that, beyond reinforcing racial stereotypes, these images could end up in the data used to train new AI models, reproducing existing prejudices on a larger scale.

“It's sad that the fight for ethical representation of people in poverty has now shifted to what doesn't exist, to computer-generated images,” said communications consultant Kate Kardol.

A spokesperson for Plan International said the organization has already adopted guidelines prohibiting the use of AI to portray children with disabilities and that the 2023 video was intended to protect the “privacy and dignity of real girls.” Adobe did not comment on the case.